The Drop in Search Console Impressions and the &num=100 Parameter Explained

Last week Google made a silent change that has sent ripples through the SEO community. By removing support for the &num=100 parameter, it caused many rank tracking tools to fail and triggered a noticeable decline in Google Search Console (GSC) impressions.

So what’s actually happened?

On 11th September, users on X started reporting that the &num=100 parameter was only working around 50% of the time. What exactly is the &num=100 parameter? By adding this parameter to the end of your search URL, you could instruct Google to return 100 result listings per page, rather than the default 10.

As a result, many rank tracking tools used it to scrape the top 100 results with a single request.
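To make the mechanics concrete, here is a minimal Python sketch of how a rank tracker might have built these URLs, and why losing &num=100 multiplies the number of requests. The base URL, the helper names `search_url` and `requests_needed`, and the use of a `start` offset parameter for paging are illustrative assumptions rather than a documented Google API. Note too that Google no longer honours `num=100` at all.

```python
from typing import Optional
from urllib.parse import urlencode

# Illustrative base URL for a Google results page (assumption for this sketch).
BASE_URL = "https://www.google.com/search"


def search_url(query: str, num: Optional[int] = None, start: Optional[int] = None) -> str:
    """Build a search URL with optional results-per-page and offset parameters."""
    params = {"q": query}
    if num is not None:
        # The &num= parameter discussed above; Google no longer honours num=100.
        params["num"] = num
    if start is not None:
        # Assumed offset of the first result, used here to page through results.
        params["start"] = start
    # urlencode escapes the query, e.g. spaces become "+".
    return f"{BASE_URL}?{urlencode(params)}"


def requests_needed(depth: int, per_page: int) -> int:
    """Number of result pages needed to see `depth` results (ceiling division)."""
    return -(-depth // per_page)


# Before: one request could cover the whole top 100.
print(search_url("running shoes", num=100))
print(requests_needed(100, per_page=100))

# After: at 10 results per page, the top 100 takes ten paged requests.
for page in range(requests_needed(100, per_page=10)):
    print(search_url("running shoes", start=page * 10))
```

With `num=100` gone, checking the top 100 positions takes ten requests of ten results each, whereas tracking only the top 20 takes two. This is one reason tools are now reconsidering how deep they track.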

At first, the 50/50 success rate led people to believe this might be some sort of test by Google, but over the weekend the parameter stopped working altogether, causing multiple ranking tools to fail.

Why is this impacting Search Console data?

Well, it shows that a lot of the impressions reported in GSC weren’t coming from real human searches; they were generated by rank tracking bots loading 100 results at a time. Without those bot views of results in positions 11 to 100, impressions have dropped significantly, and that is the data now “missing” from GSC. It’s also why many reports show an improvement in average position (the number is getting lower): real users seldom click through to page 2 or beyond, so the deep-position impressions that used to inflate the figure are no longer being counted.
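The effect on average position is easiest to see with numbers. The sketch below uses entirely hypothetical figures and treats average position as a simple impression-weighted mean, which is a simplification of how GSC calculates it. The `from_bots` flag is also hypothetical, since GSC does not label which impressions came from bots.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Row:
    position: float
    impressions: int
    from_bots: bool  # hypothetical flag; GSC does not expose this


def summarise(rows: List[Row]) -> Tuple[int, float]:
    """Return total impressions and the impression-weighted average position."""
    total = sum(r.impressions for r in rows)
    avg = sum(r.position * r.impressions for r in rows) / total
    return total, round(avg, 1)


# Made-up data: real users see the page at position 4,
# while rank trackers also "saw" it at position 45.
rows = [
    Row(position=4, impressions=1000, from_bots=False),
    Row(position=45, impressions=500, from_bots=True),
]

before = summarise(rows)
after = summarise([r for r in rows if not r.from_bots])
print(before)  # (1500, 17.7)
print(after)   # (1000, 4.0)
```

In this made-up case, losing 500 bot impressions at position 45 cuts impressions by a third while average position improves from 17.7 to 4.0, even though nothing about the ranking real users see has changed.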

Why has Google made this change?

We can’t be certain why Google made this change, as it came with no warning and, as yet, there hasn’t been any official comment or blog post confirming it. It’s possible that the update is an attempt to make it harder for AI companies such as OpenAI (the maker of ChatGPT) to scrape the SERPs and use Google search data to train their models. Others have suggested it may be a response to the Great Decoupling debate (the widening gap between rising impressions and flat or falling clicks) and the impact of Google’s own AI Overviews on impression and click trends.

What should you do?

Firstly, it’s worth noting that this isn’t a bad thing. Your performance hasn’t actually changed, and this isn’t an algorithm update that you need to worry about. Instead, we’re now getting a cleaner view of real user activity, which is a good thing when it comes to using that data to make business decisions.

If you have stakeholders in your business who monitor impression data religiously, make sure they’re aware of this update and understand why it’s affecting the reported metrics.

Then, as always, keep an eye on your real KPIs. If clicks are stable or growing (which is still what we’re seeing in the GSC dashboards of most of our clients) and your on-site metrics are still strong, then there’s nothing you need to worry about.

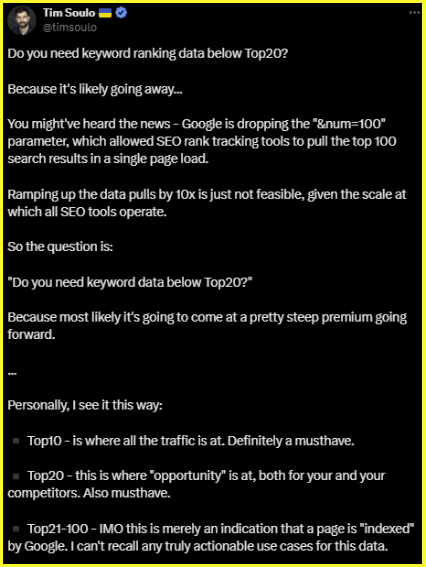

When it comes to monitoring your keyword rankings going forward, big tools such as SEMrush and Ahrefs have already published updates and are, of course, watching the situation closely to see how they can adapt. Tim Soulo, the CMO of Ahrefs, has advised considering how much data you really need, and we agree with him: for most businesses, monitoring the top 20 results is more than enough. Track any deeper than that and you’ll need to start weighing up the costs against the benefits, as that data will soon come with a premium price tag.

It’s still early days for this update, and we’ll continue to monitor the impact. For now, we recommend staying focused on your overarching strategy and continuing to build data measurement frameworks that rely on your own first-party data as much as possible, giving careful consideration to what additional data you really need.

Concerned about your SEO traffic or have any questions on this update? Contact us here
